Category Archives for Big Data

Enterprise Analytic Solutions 2020

Data is the foundation of any meaningful corporate initiative.

Fully master the necessary data and you’re more than halfway to success.

That’s why leverageable (i.e., multi-use) artifacts of the enterprise data environment are so critical to enterprise success.

Build them once (keep them updated), and use them again many, many times for many and diverse ends.

The data warehouse remains focused strongly on this goal.

And that may be why, nearly 40 years after the first database was labeled a “data warehouse,” analytic database products still target the data warehouse.

This report is the third in a series of enterprise roadmaps addressing cloud analytic databases.

The last two reports focused on comparing vendors on key decision criteria that were targeted primarily at cloud integration.

The vectors represented how well the products provided the features of the cloud that corporate customers have come to expect.

In 2017, we chose products with cloud analytic databases that deploy exclusively in the cloud or that had undergone a major renovation for cloud deployments.

In 2019, we updated that report with the same vendors.

This report is an update to the 2019 Enterprise Roadmap: Cloud Analytic Databases.

However, this time around we have new vendors and a new name.

We’ve reviewed and adjusted our inclusion criteria.

We’re now targeting the technologies that tackle the objectives of an analytics program, as opposed to the means by which they are achieving these objectives.

These days many believe the best vessel to be a data lake/cloud storage (not necessarily in data page/relational-like format).

And many are finding ways to join the relational database with the lake as a “lakehouse,” treating the data lake as external tables.

We have included here viable solutions that don’t have a traditional data warehouse.

Source: gigaom.com

Enterprise Data Governance with Modern Data Catalog Platforms

Data catalogs, a category of product in the broad field of data governance, are emerging in popularity.

That popularity has been brought on by the twin enterprise mandates of complying with data regulations and herding the growing number of repositories in the corporate data estate.

But data catalogs are a legacy product category too, originally stemming from simple data dictionaries – essentially table layouts with plain-English descriptions of tables and fields.

Today’s data catalogs have grown in capabilities, importance, and integration with other tools.

In a nutshell, data catalog platforms help organizations inventory their data by documenting data set content, location, and structure; and aligning business and technical metadata.

Control Helps Enterprise

This organization yields control, and having control helps enterprises:

Achieve Compliance

Achieve compliance with data protection regulations, through documentation and inventory.

End users know where to get data and will avoid duplicating it.

Organizations can control access to entire data sets where necessary and can better enforce role-based access to data subsets within them.

The EU’s General Data Protection Regulation (GDPR) is in effect now, with very strict fines for non-compliance.

The GDPR’s companion ePrivacy Regulation (ePR) may come into effect in 2019, and the California Consumer Privacy Act (CCPA) has been passed and goes into effect on January 1, 2020.

These regulations demand the structure and controls that data catalogs provide.

Improve Data Lake ROI

Improve data lake ROI by making data within the lake more discoverable and increasing the lake’s usability in general.

A well-organized, searchable data catalog makes it easy to find relevant data, analyze it, derive insights, and make decisions with greater speed and conviction.

These are the very reasons most enterprises built their data lakes in the first place.

Unify Data Landscape

Unify the data landscape by creating a consolidated volume of information covering the data lake, data warehouse, and operational databases.

Implemented correctly, data catalogs integrate these components through a shared abstraction, helping customers derive new value from older warehouse and operational database assets.

Bring Data & Business Closer

Bring data and the business closer together, by mapping business entity definitions onto data sets and columns within them.

A great data catalog provides a business glossary that helps business users find the data they need within the context of their own concepts, taxonomies, and vocabulary.
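As a rough illustration of how a business glossary might sit on top of a technical inventory, the Python sketch below models catalog entries (content, location, structure) and maps business terms onto data sets and columns. All class, field, and data set names are hypothetical; real catalog platforms expose far richer models than this.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class DatasetEntry:
    """Technical metadata for one data set: what it holds, where, and its columns."""
    name: str
    location: str            # e.g. a warehouse schema or a data lake path
    description: str
    columns: Dict[str, str]  # column name -> plain-English description


@dataclass
class Catalog:
    datasets: Dict[str, DatasetEntry] = field(default_factory=dict)
    # business term -> list of (dataset name, column name) it maps onto
    glossary: Dict[str, List[Tuple[str, str]]] = field(default_factory=dict)

    def register(self, entry: DatasetEntry) -> None:
        self.datasets[entry.name] = entry

    def map_term(self, term: str, dataset: str, column: str) -> None:
        # Refuse mappings that point at data the catalog has not documented.
        if column not in self.datasets[dataset].columns:
            raise KeyError(f"{dataset}.{column} is not in the catalog")
        self.glossary.setdefault(term.lower(), []).append((dataset, column))

    def find(self, term: str) -> List[str]:
        """Resolve a business term to fully qualified physical locations."""
        return [
            f"{self.datasets[ds].location}.{col}"
            for ds, col in self.glossary.get(term.lower(), [])
        ]


catalog = Catalog()
catalog.register(DatasetEntry(
    name="crm_accounts",
    location="warehouse.sales.crm_accounts",
    description="One row per customer account",
    columns={"acct_nm": "Legal name of the customer", "acct_id": "Account key"},
))
catalog.register(DatasetEntry(
    name="web_events",
    location="lake.raw.web_events",
    description="Clickstream events",
    columns={"visitor_name": "Self-reported visitor name"},
))
catalog.map_term("Customer Name", "crm_accounts", "acct_nm")
catalog.map_term("Customer Name", "web_events", "visitor_name")
```

A business user searching for "customer name" is pointed to both the warehouse column and the lake column, without needing to know the cryptic physical names.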

The summary, then, is that catalogs protect enterprises from regulatory jeopardy and benefit them by delivering more value from existing assets.

Today’s data catalogs enable collaboration between custodians of the data (“data stewards” in contemporary parlance) and business users by mapping out the organization’s data, which makes it more usable for analysis, and thereby benefits the organization.

The vendors discussed in this report all provide baseline functionality (discussed in the Definition section, below) and each has its own emphasis.

Broadly speaking, the products break down into those that are more governance-focused, and those that have a penchant for enabling self-service analysis in the organization by enhancing data discoverability and usability.

Within those two broad categories are sub-emphases, detailed in the diagram below.

Figure 1: Data Catalogs: Categories and Priorities

Each of the above designations will become clearer through the course of this report.

Source: gigaom.com

Isn’t It Time to Rethink Your Cloud Strategy?

Last year, at re:Invent, Amazon Web Services (AWS) launched Outposts and finally validated the concept of a hybrid cloud. Not that it was really necessary, but still…

At the same time, what was once defined as a cloud-first strategy (with the idea of starting every new initiative on the cloud, often with a single service provider) is today evolving into a multi-cloud strategy.

This new strategy is based on a broad spectrum of possibilities that range from deployments on public clouds to on-premises infrastructures.

Purchasing everything from a single service provider is very easy and solves numerous issues but, in the end, this means accepting a lock-in that doesn’t pay off in the long run.

Last month I was speaking with the IT director of a large manufacturing company in Italy who described how over the last few years his company had enthusiastically embraced one of the major cloud providers for almost every critical company project.

He reported that the strategy had resulted in an IT budget out of control, even when taking into account new initiatives like IoT projects.

The company’s main goal for 2019 is to find a way to regain control by repatriating some applications, building a multi-cloud strategy, and avoiding past mistakes like going “all in” on a single provider.

There Is Multi-Cloud and Multi-Cloud

My recommendation to them was not to merely select a different provider for every project but to work on a solution that would abstract applications and services from the infrastructure.

Meaning that you can buy a service from a provider, but you can also decide to go for raw compute power and storage and build your own service instead.

This service will be optimized for your needs and will be easy to replicate and migrate on different clouds.

Let’s take an example here.

You can have access to a NoSQL database from your provider of choice, or you can decide to build your NoSQL DB service starting from products that are available in the market.

The former is easier to manage, whereas the latter is more flexible and less expensive.

Containers and Kubernetes can make it easier to deploy, manage and migrate from cloud to cloud.

Kubernetes is now available from all major providers in various forms.

The core is the same, and it is pretty easy to migrate from one platform to the other.

And once into containers, you’ll find loads of prepared images and others that can be prepared for every need.

Multi-Cloud Storage

Storage, as always, is a little bit more complicated than computing.

Data has gravity and, as such, is difficult to move; but there are a few tools that come in handy when you plan for multi-cloud.

Block storage is the easiest to move.

It is usually smaller in size, and now there are several tools that can help protect, manage and migrate it — both at the application and infrastructure levels.

There are plenty of solutions.

In fact, almost every vendor now offers a virtual version of its storage appliances that run on the cloud, as well as other tools to facilitate the migration between clouds and on-premises infrastructures.

Think about Pure Storage or NetApp, just to name a couple.

It’s even easier at the application level.

Going back to the NoSQL mentioned earlier, solutions like Rubrik DatosIO or Imanis Data can help with migrations and data management.

File and object stores are significantly bigger and, if you do not plan in advance, moving them can get a bit complicated (but it is still feasible).

Start by working with standard protocols and APIs.

Those who choose S3 API for object storage needs will find it very easy to select a compatible storage system both on the cloud and for on-premises infrastructures.

At the same time, many interesting products now allow you to access and move data transparently across several repositories (the list is getting longer by the day but, just to give you an idea, take a look at Hammerspace, Scality Zenko, Red Hat NooBaa, and SwiftStack 1Space).

I recently wrote a report for GigaOm about this topic and you can find more here.

The same goes for other solutions.

Why would you stay with a single cloud storage backend when you can have multiple ones, get the best out of them, maintain control over data, and manage it on a single overlaying platform that hides complexity and optimizes data placement through policies?

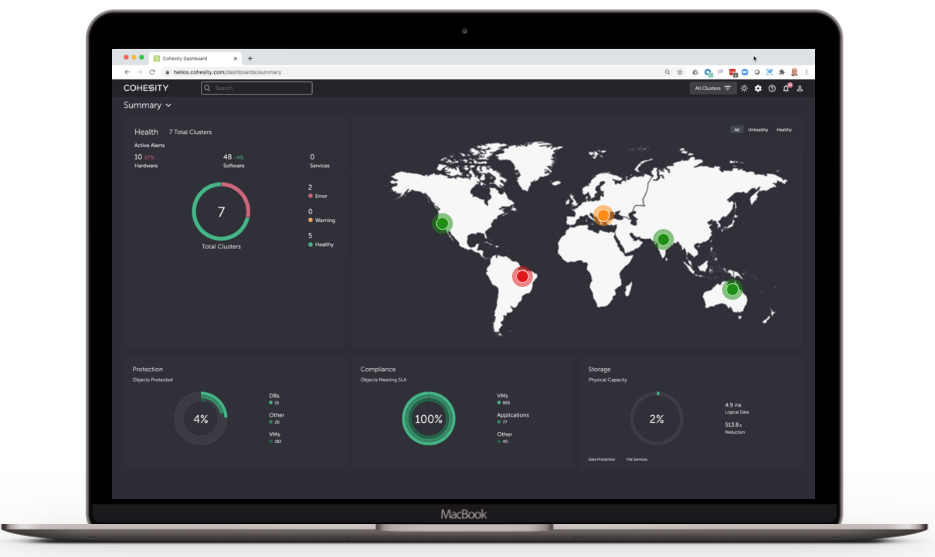

Take a look at what Cohesity is doing to get an idea of what I’m saying here.
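To make the idea of a single overlay platform with policy-driven placement a little more concrete, here is a minimal Python sketch. It assumes a tiny put/get contract that every backend honors (in practice this role is often played by a standard such as the S3 API). The class names, the `by_prefix` policy, and the in-memory backends are all hypothetical stand-ins, not any vendor's product or API.

```python
from typing import Callable, Dict, Protocol


class ObjectStore(Protocol):
    """The minimal contract every backend must honor (hypothetical)."""
    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in for a real backend such as a cloud bucket or an on-premises object store."""
    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class Overlay:
    """Single namespace over several backends; a policy decides where data lands."""
    def __init__(self, backends: Dict[str, ObjectStore],
                 policy: Callable[[str, bytes], str]) -> None:
        self._backends = backends
        self._policy = policy
        self._placement: Dict[str, str] = {}  # key -> backend name

    def put(self, key: str, data: bytes) -> str:
        target = self._policy(key, data)
        self._backends[target].put(key, data)
        self._placement[key] = target
        return target

    def get(self, key: str) -> bytes:
        # Callers never need to know which backend holds the object.
        return self._backends[self._placement[key]].get(key)

    def migrate(self, key: str, target: str) -> None:
        # Copy to the new backend and repoint the namespace. The old copy is
        # left in place here; a real tool would delete or expire it.
        data = self.get(key)
        self._backends[target].put(key, data)
        self._placement[key] = target


def by_prefix(key: str, data: bytes) -> str:
    """Example policy: keep confidential data on-premises, everything else in the cloud."""
    return "on_prem" if key.startswith("confidential/") else "public_cloud"


overlay = Overlay(
    {"on_prem": InMemoryStore(), "public_cloud": InMemoryStore()},
    by_prefix,
)
```

Because applications only talk to `overlay`, moving an object between providers is a call to `migrate` rather than an application change, which is the kind of abstraction that keeps lock-in at bay.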

The Human Factor of Multi-Cloud

Regaining control of your infrastructure is good from the budget perspective and for the freedom of choice it provides in the long term.

On the other hand, working more on the infrastructure side of things requires an investment in people and their skills.

I’d put this as an advantage, but not everybody thinks this way.

In my personal opinion, it is highly likely that a more skilled team will be able to make better choices, react quicker, and build optimized infrastructures, which can have a positive impact on the competitiveness of the entire business. On the other hand, if the organization is too small, it is hard to find the right balance.

Closing the Circle

Amazon AWS, Microsoft Azure, and Google Cloud are building formidable ecosystems and you can decide that it is ok for you to stick with only one of them. Perhaps your cloud bill is not that high and you can afford it anyway.

You can also decide that multi-cloud means multiple cloud silos, but that is a very bad strategy.

Alternatively, there are several options out there to build your Cloud 2.0 infrastructure and maintain control over the entire stack and data.

True, it’s neither the easiest path nor the least expensive at the beginning, but it is the one that will probably pay off the most in the long term and will increase the agility and level of competitiveness of your infrastructure.

This March, on the 26th, I will be co-hosting a GigaOm webinar sponsored by Wasabi on this topic. There is also an interview I recorded not too long ago with Zachary Smith (CEO of Packet) about new ways to think about cloud infrastructures. It is worth a listen if you are interested in knowing more about a different approach to cloud and multi-cloud.

Originally posted on Juku.it

Source: gigaom.com

Data Virtualization: A Spectrum of Approaches – A GigaOm Market Landscape Report

As the appetite for ad-hoc access to both live and historical data rises among business users, the demand stretches the limits of how even the most robust analytics tools can navigate a spiraling universe of datasets.

Satisfying this new order is often constrained by the laws of physics.

Analytics On-demand

Increasingly, the analytics-on-demand phenomenon, born of an intense focus on data-driven business decision-making, means there is neither time for traditional extract, transform, and load (ETL) processes nor the time to ingest live data from their source repositories.

Time is not the only factor.

Volume & Speed

The pure volume and speed with which data is generated are beyond the capacity and economic bounds of today’s typical enterprise infrastructures.

While breaking the laws of physics is obviously not in the domain of data professionals, a viable way to work around these physical limitations of querying data is by applying federated, virtual access.

Data Virtualization (DV) — A Solution

This approach, data virtualization (DV), is a solution a growing number of large organizations are exploring and many have implemented in recent years.

Data Virtualization (DV) — Benefit #1

The appeal of DV is straightforward: by creating a federated tier where information is abstracted, it can enable centralized access to data services.

Data Virtualization (DV) — Benefit #2

In addition, with some DV solutions, cached copies of the data are available, providing the performance of more direct access without the source data having to be rehomed.
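The caching behavior described above can be sketched as a simple read-through cache with a time-to-live. This is only an illustration under stated assumptions: the `CachedSource` class, its `fetch` callback (standing in for a query against the remote source), and the TTL policy are hypothetical and do not describe how any particular DV product manages its cache.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class CachedSource:
    """Read-through cache in front of a remote source; entries expire after a TTL."""

    def __init__(self, fetch: Callable[[Hashable], Any], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch          # stand-in for a query against the source system
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested deterministically
        self._cache: Dict[Hashable, Tuple[float, Any]] = {}
        self.remote_calls = 0        # how often we actually went back to the source

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        cached = self._cache.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]         # fresh enough: serve the cached copy
        self.remote_calls += 1
        value = self._fetch(key)     # stale or missing: go back to the source
        self._cache[key] = (now, value)
        return value
```

The source data never moves; only a short-lived copy of the answer is kept close to the consumer, and the TTL bounds how stale that copy can get.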

Data Virtualization (DV) — Benefit #3

Implementing DV is also attractive because it bypasses the need for ETL, which can be time-consuming and unnecessary in certain scenarios.

Whether under the “data virtualization,” “data fabric,” or “data as a service” moniker, many vendors and customers see it as a core approach to creating logical data warehousing.

Data Virtualization (DV) — Benefit #4

Data virtualization has been around for a while; nevertheless, we are seeing a new wave of DV solutions and architectures that promise to enhance its appeal and feasibility to solve the onslaught of new BI, reporting, and analysis requirements.

Data Virtualization – A Somewhat Nebulous Term

A handful of vendors offer platforms and services that are focused purely on enabling data virtualization and are delivered as such.

Others offer it as a feature in broader big data portfolios.

Regardless, enterprises that implement data virtualization gain a virtual layer over their structured and even unstructured datasets from relational and NoSQL databases, Big Data platforms, and even enterprise applications. This layer allows for the creation of logical data warehouses, accessed with SQL, REST, and other data query methods.

This provides access to data from a broader set of distributed sources and storage formats.

Moreover, DV can do this without requiring users to know where the data resides.
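A toy version of such a virtual layer can be built with Python's standard `sqlite3` module. In this sketch, two separate in-memory databases play the role of independent source systems, and a temporary view presents them as one logical table. The schema names (`crm`, `billing`), tables, and sample rows are invented for illustration; production DV platforms federate across genuinely remote and heterogeneous sources.

```python
import sqlite3
from typing import List, Tuple


def build_virtual_layer() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")

    # Two independent "source systems", each attached under its own schema name.
    conn.execute("ATTACH DATABASE ':memory:' AS crm")
    conn.execute("ATTACH DATABASE ':memory:' AS billing")

    conn.execute("CREATE TABLE crm.customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE billing.invoices (customer_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO crm.customers VALUES (?, ?)",
                     [(1, "Acme"), (2, "Globex")])
    conn.executemany("INSERT INTO billing.invoices VALUES (?, ?)",
                     [(1, 100.0), (1, 50.0), (2, 75.0)])

    # The "virtual" table: a TEMP view may reference any attached database,
    # so consumers query one name and never see where the rows live.
    conn.execute("""
        CREATE TEMP VIEW customer_revenue AS
        SELECT c.name AS name, SUM(i.amount) AS revenue
        FROM crm.customers AS c
        JOIN billing.invoices AS i ON i.customer_id = c.id
        GROUP BY c.name
    """)
    return conn


def revenue_by_customer(conn: sqlite3.Connection) -> List[Tuple[str, float]]:
    # The caller knows only the logical name, not the underlying sources.
    return conn.execute(
        "SELECT name, revenue FROM customer_revenue ORDER BY name"
    ).fetchall()
```

Nothing is copied between the two sources; the join is resolved at query time, which is the essential difference from an ETL pipeline that would first land both tables in a warehouse.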

Various Factors Influencing the Need for DV

In addition to the growth of data, the increased accessibility of self-service Business Intelligence (BI) tools such as Microsoft’s Power BI, Tableau, and Qlik is creating more concurrent queries against both structured and unstructured data.

The notion that data is currency, while perhaps cliché, is increasingly and verifiably the case in the modern business world.

Accelerating the growth in data is the overall trend toward digitization, the pools of new machine data, and the ability of analytics tools and machine learning platforms to analyze streams of data from these and other sources, including social media.

Compounding this trend is the growing use and capabilities of cloud services and the evolution of Big Data solutions such as Apache Hadoop and Spark.

Besides ad-hoc reporting and self-service BI demands being bigger than ever, many enterprises now have data scientists whose jobs are to figure out how to make use of all this new data in order to make their organizations more competitive.

The emergence of cloud-native apps, enabled by Docker containers and Kubernetes, will only make analysis features more common throughout the enterprise technology stack.

Meanwhile, the traditional approach of moving and transforming data to meet these needs and power these analytic capabilities is becoming less feasible with each passing requirement.

In this report, we explore data virtualization products and technologies, and how they can help organizations that are experiencing this accelerated demand while simplifying the query process for end-users.

Key Findings:

- Data virtualization is a relatively new option, with still-evolving capabilities for queries or searches against transactional and historical data, in near real-time, without having to know where the data resides.

- DV is often optimized for remote access to a cached layer of data, eliminating the need to move the data or allocate storage resources for it.

- DV is an alternative to the more common approach of moving data into warehouses or marts, by ingesting and transforming it using ETL and data prep tools.

- In addition to providing better efficiency and faster access to data, data virtualization can offer a foundation for addressing data governance requirements, such as compliance with the European Union’s General Data Protection Regulation (GDPR), by ensuring data is managed and stored as required by such regulatory regimes.

- Data virtualization often provides the underlying capability for logical data warehouses.

- Many DV vendors are accelerating the capabilities of their solutions by offering the same massively parallel processing (MPP) query capabilities found on conventional data warehouse platforms.

Data Virtualization Products

Products are available across a variety of data virtualization approaches, including:

- (1) core DV platforms;

- (2) standalone SQL query engines that can connect to a variety of remote data sources and can query across them;

- (3) data source bridges from conventional database platforms that connect to files in cloud object storage, big data platforms, and other databases; and

- (4) automated data warehouses.

Source: gigaom.com

From Data Protection to Data Management and Beyond

Just three weeks into 2019, Veeam announced a $500M funding round.

The company is privately held, profitable, and has a pretty solid revenue stream coming from hundreds of thousands of happy customers.

But, still, they raised $500M!

I didn’t see it coming, but if you look at what is happening in the market, it’s not a surprising move.

Market valuation of companies like Rubrik and Cohesity is off the chart and it is pretty clear that while they are spending boatloads of money to fuel their growth, they are also developing platforms that are well beyond traditional data protection.

Backup Is Boring

Backup is one of the most tedious, yet critical, tasks to be performed in the IT space.

You need to protect your data and save a copy of it in a secure place in case of a system failure, human error, or worse, like in the case of natural disasters and cyberattacks.

But as critical as it is, the differentiations between backup solutions are getting thinner and thinner.

Vendors like Cohesity got it right from the very beginning of their existence.

It is quite difficult, if not impossible, to consolidate all your primary storage systems in a single large repository, but if you concentrate backups on a single platform then you have all of your data in a single logical place.

In the past, backup was all about throughput and capacity with very low CPU, and media devices were designed for few sequential data streams (tapes and deduplication appliances are perfect examples).

Why are companies like Rubrik and Cohesity so different then?

Well, from my point of view they designed an architecture that enables them to do much more with backups than what was possible in the past.

Next-gen Backup Architectures

Adding a scale-out file system to this picture was the real game-changer.

Every time you expand the backup infrastructure to store more data, the new nodes also contribute to increasing CPU power and memory capacity.

With all these resources at your disposal, and the data that can be collected through backups and other means, you’ve just built a big data lake … and with all that CPU power available, you are just one step away from transforming it into a very effective big data analytics cluster!

From Data Protection to Analytics and Management

Starting from this background it isn’t difficult to explain the shift that is happening in the market and why everybody is talking more about the broader concept of data management rather than data protection.

Some may argue that it’s wrong to associate data protection with data management and in this particular case the term data management is misleading and inappropriately applied.

But, there is much to be said about it and it could very well become the topic for another post.

Also, I suggest you take a look at the report I recently wrote about unstructured data management to get a better understanding of my point of view.

Data Management for Everybody

Now that we have the tool (a big data platform), the next step is to build something useful on top of it, and this is the area where everybody is investing heavily.

Even though Cohesity is leading the pack and started showing the potential of this type of architecture years ago with its analytics workbench, the race is open and everybody is working on out-of-the-box solutions.

In my opinion, these out-of-the-box solutions, which will be nothing more than customizable big data jobs with a nice and easy-to-use UI on top, will put data management within reach of everyone in your organization.

This means that data governance, security, and many business roles will benefit from it.

A Quick Solution Roundup

As mentioned earlier, Cohesity is in a leading position at the moment and they have all the features needed to realize this kind of vision, but we are just at the beginning and other vendors are working hard on similar solutions.

Rubrik, which has a similar architecture, has chosen a different path. They’ve recently acquired Datos IO and started offering NoSQL DB data management.

Even though NoSQL is growing steadily in enterprises, this is a niche use case at the moment and I expect that sooner or later Rubrik will add features to manage data they collect from other sources.

Not long ago I spoke highly about Commvault, and Activate is another great example of their change in strategy.

This is a tool that can be a great companion of their backup solution but can also live alone, enabling the end-user to analyze, get insights and take action on data.

They’ve already demonstrated several use cases in fields like compliance, security, e-discovery, and so on.

Getting back to Veeam … I really loved their DataLabs and what it can theoretically do for data management.

Still not at its full potential, this is an orchestrator tool that lets you take backups, create a temporary sandbox, and run applications against them.

It is not fully automated yet, and you have to bring your own application.

If Veeam can make DataLabs ready to use with out-of-the-box applications it will become a very powerful tool for a broad range of use cases, including e-discovery, ransomware protection, index & search, and so on.

These are only a few examples of course, and the list is getting longer by the day.

Closing the Circle

Data management is now key in several areas.

We’ve already lost the battle against data growth and consolidation, and at this point finding a way to manage data properly is the only way to go.

With ever-larger storage infrastructures under management, and sysadmins that now have to manage petabytes instead of hundreds of terabytes, there is a natural shift towards automation for basic operations and the focus is more on what is really stored in the systems.

Furthermore, with the increasing amount of data, expanding multi-cloud infrastructures, new demanding regulations like GDPR, and ever-evolving business needs, the goal is to maintain control over data no matter where it is stored.

And this is why data management is at the center of every discussion now.

Originally posted on Juku.it

Source: gigaom.com

Enterprise Data Governance with Modern Data Catalog Platforms: A GigaOm Research Byte

Summary

Data catalogs, a category of product in the broad field of data governance, are emerging in popularity.

That popularity has been brought on by the twin enterprise mandates of complying with data regulations and herding the growing number of repositories in the corporate data estate.

But data catalogs are a legacy product category too, originally stemming from simple data dictionaries – essentially table layouts with plain-English descriptions of tables and fields.

Today’s data catalogs have grown in capabilities, importance, and integration with other tools.

In a nutshell, data catalog platforms help organizations inventory their data by documenting data set content, location, and structure; and aligning business and technical metadata.

This organization yields control, and having control helps enterprises:

- Achieve compliance with data protection regulations, through documentation and inventory.

End users know where to get data and will avoid duplicating it.

Organizations can control access to entire data sets where necessary and can better enforce role-based access to data subsets within them.

The EU’s General Data Protection Regulation (GDPR) is in effect now, with very strict fines for non-compliance.

The GDPR’s companion ePrivacy Regulation (ePR) is pending, and the California Consumer Privacy Act (CCPA) has been passed and will likely be in effect by the time you read this.

These regulations demand the structure and controls that data catalogs provide.

- Improve data lake ROI by making data within the lake more discoverable and increasing the lake’s usability in general.

A well-organized, searchable data catalog makes it easy to find relevant data, analyze it, derive insights, and make decisions with greater speed and conviction.

These are the very reasons most enterprises built their data lakes in the first place.

- Unify the data landscape by creating a consolidated volume of information covering data lake, data warehouse, and operational databases.

Implemented correctly, data catalogs integrate these components through a shared abstraction, helping customers derive new value from older warehouse and operational database assets.

- Bring data and the business closer together, by mapping business entity definitions onto data sets and columns within them.

A great data catalog provides a business glossary that helps business users find the data they need within the context of their own concepts, taxonomies, and vocabulary.

The summary, then, is that catalogs protect enterprises from regulatory jeopardy and benefit them by delivering more value from existing assets.

Today’s data catalogs enable collaboration between custodians of the data (“data stewards” in contemporary parlance) and business users by mapping out the organization’s data, which makes it more usable for analysis, and thereby benefits the organization.

The vendors discussed in this report all provide baseline functionality (discussed in the Definition section, below) and each has its own emphasis.

Broadly speaking, the products break down into those that are more governance-focused, and those that have a penchant for enabling self-service analysis in the organization by enhancing data discoverability and usability.

Within those two broad categories are sub-emphases, detailed in the diagram below.

Figure 1: Data Catalogs: Categories and Priorities

Each of the above designations will become clearer through the course of this report.

Source: gigaom.com

Strategies for Moving from Application to Enterprise Customer Master Data Management

Many organizations start their Master Data Management (MDM) journey in support of a specific sponsor business need.

Often hard-fought concessions are made to allow MDM techniques to support the data needs of a particular function.

Now, since almost every application needs your customer master, it’s time to expand the scope of MDM, replace substandard methods of accessing customer data in the enterprise, and establish the MDM hub for use by new applications across all customer business processes.

Crossing the chasm to the enterprise customer 360 can be daunting. During this 1-Hour Webinar, GigaOm analyst William McKnight will share some strategies for moving from application to enterprise customer MDM and will discuss the ruggedization that must exist in MDM to be ready.

During this 1-Hour Webinar we will examine:

- How MDM is established with TCO

- The MDM Maturity Model (single application MDM is low)

- Elements in MDM necessary for takeoff:

  - A data quality program (prove data quality according to beloved peers)

  - DaaS SLAs – how do they work with the hub?

  - Operational integration (including data lake) AND value-added customer analytical elements (perhaps gleaned from transactional data)

  - Syndicated data take-on

  - Graph capabilities

About William McKnight

William McKnight has advised many of the world’s best-known organizations.

His strategies form the information management plan for leading companies in various industries.

He is a prolific author and a popular keynote speaker and trainer.

He has performed dozens of benchmarks on the leading database, streaming, and data integration products in the past year.

William is the lead data analyst for GigaOm and is the #1 global influencer in data warehousing and master data management. He leads McKnight Consulting Group, which placed on the Inc. 5000 list in 2017 and 2018.

Source: gigaom.com

Five Questions For… Seong Park at MongoDB

MongoDB came onto the scene alongside a number of data management technologies, all of which emerged on the basis of: “You don’t need to use a relational database for that.”

Back in the day, SQL-based approaches became the only game in town first due to the way they handled storage challenges, and then a bunch of open source developers came along and wrecked everything.

So we are told.

Having firmly established itself in the market and proved that it can deliver scale (Fortnite is a flagship customer), the company nonetheless needs to move with the times.

Having spoken to Seong Park, VP of Product Marketing & Developer Advocacy, several times over the past 6 weeks, I thought it was worth capturing the essence of our conversations.

Object-focused Approach to Database

Q1: How do you engage with developers that are the same, or different from data-oriented engineers? Traditionally these have been two separate groups to be treated separately, is this how you see things?

A1: MongoDB began as the solution to a problem that was increasingly slowing down both developers and engineers: the old relational database simply wasn’t cutting the mustard anymore.

And that’s hardly surprising since the design is more than 40 years old.

MongoDB’s entire approach is about driving developer productivity, and we take an object-focused approach to databases.

You don’t think of data stored across tables, you think of storing info that’s associated, and you keep it together.

That’s how our database works.

We want to make sure that developers can build applications.

That’s why we focus on offering uncompromising user experiences.

Our solution should be as easy, seamless, simple, effective, and productive as possible.

We are all about enabling developers to spend time on the things they care about: developing, coding, and working with data in a fast, natural way.

When it comes to DevOps, a core tenet of the model is to create multi-disciplinary teams that can collectively work in small squads, to develop and iterate quickly on apps and microservices.

Increasingly, data engineers are a part of that team, along with developers, operations staff, security, product managers, and business owners.

We have built capabilities and tools to address all of those groups.

For data engineers, we have in-database features such as the aggregation pipeline that can transform data before processing.

We also have connectors that integrate MongoDB with other parts of the data estate – for example, from BI to advanced analytics and machine learning.
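As a rough illustration of what "transforming data in the database" can look like, here is a minimal sketch of an aggregation pipeline written as a list of stages. The `$match`, `$group`, and `$sort` stages are standard MongoDB operators; the sample documents, the field names, and the `run_pipeline` helper are hypothetical and exist only so the example runs without a server.

```python
from collections import defaultdict

# Hypothetical sample documents; field names are invented for illustration.
orders = [
    {"store": "north", "status": "paid", "amount": 40},
    {"store": "north", "status": "paid", "amount": 60},
    {"store": "south", "status": "paid", "amount": 25},
    {"store": "south", "status": "refunded", "amount": 25},
]

# Pipeline in MongoDB's aggregation syntax: filter, group and sum, then sort.
pipeline = [
    {"$match": {"status": "paid"}},
    {"$group": {"_id": "$store", "total": {"$sum": "$amount"}}},
    {"$sort": {"total": -1}},
]


def run_pipeline(docs, stages):
    """Toy in-memory stand-in for the server (an assumption, not MongoDB code).

    Supports only the three stage shapes used above: equality $match,
    a single $sum accumulator in $group, and a single-key $sort.
    """
    for stage in stages:
        (op, spec), = stage.items()
        if op == "$match":
            docs = [d for d in docs
                    if all(d.get(k) == v for k, v in spec.items())]
        elif op == "$group":
            key_field = spec["_id"].lstrip("$")
            out_name, acc = next((k, v) for k, v in spec.items() if k != "_id")
            sum_field = acc["$sum"].lstrip("$")
            totals = defaultdict(int)
            for d in docs:
                totals[d[key_field]] += d[sum_field]
            docs = [{"_id": k, out_name: v} for k, v in totals.items()]
        elif op == "$sort":
            (field, direction), = spec.items()
            docs = sorted(docs, key=lambda d: d[field], reverse=direction < 0)
        else:
            raise ValueError(f"unsupported stage: {op}")
    return docs


result = run_pipeline(orders, pipeline)
```

Against a real deployment, the same `pipeline` list would typically be handed to the driver (for example PyMongo's `collection.aggregate(pipeline)`), so the filtering and summing happen inside the database rather than in application code.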

MongoDB – An Enabler of DevOps

Q2: Database structures such as MongoDB are an enabler of DevOps practices; at the same time, data governance can be a hindrance to speed and agility. How do you ensure you help speed things up, and not slow them down?

A2: Unlike other non-relational databases, MongoDB gives you a completely tunable schema – the skeleton representing the structure of the entire database.

The benefit here is that the development phase is supported by a flexible and dynamic data model, and when the app goes into production, you can enforce schema governance to lock things down.

The governance itself is also completely tunable, so you can set up your database to support your needs, rather than being constrained by structure.

This is an important differentiator for MongoDB.
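One way to picture this tunable governance is through MongoDB's schema validation options. The sketch below only builds the `collMod` command documents; `collMod`, `$jsonSchema`, `validationLevel`, and `validationAction` are real MongoDB options, while the collection name, the required fields, and the mapping of "development" to lenient settings and "production" to strict ones are assumptions made for illustration, not something the interview spells out.

```python
ALLOWED_LEVELS = {"off", "moderate", "strict"}
ALLOWED_ACTIONS = {"warn", "error"}


def validation_command(collection, required_fields, level, action):
    """Build a collMod command document that sets schema validation rules.

    Nothing is sent to a server here; the function only assembles the dict.
    """
    if level not in ALLOWED_LEVELS:
        raise ValueError(f"unknown validationLevel: {level}")
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown validationAction: {action}")
    return {
        "collMod": collection,
        "validator": {
            "$jsonSchema": {
                "bsonType": "object",
                "required": list(required_fields),
            }
        },
        "validationLevel": level,
        "validationAction": action,
    }


# Hypothetical collection and fields, chosen only for the example.
fields = ["customer_id", "amount"]

# Development: log violations but still accept the writes.
dev_cmd = validation_command("orders", fields, "moderate", "warn")

# Production: reject any insert or update that violates the schema.
prod_cmd = validation_command("orders", fields, "strict", "error")
```

With a driver such as PyMongo, a command document like this would normally be sent with `db.command(prod_cmd)`; the same collection can therefore start out permissive and be locked down later without a data migration.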

Another major factor that reduces speed and agility is scale.

Over the last two to three years, we have been building mature tooling that enterprises and operators alike will care about, because it makes it easy to manage and operate MongoDB and to apply upgrades, patches, and security fixes, even when you’re talking about hundreds of thousands of clusters.

One of the key reasons why we have seen such acceleration in the adoption of MongoDB, not only in the enterprise but also by startups and smaller businesses, is that we make it so easy to get started with MongoDB.

We want to make it easy to get to market very quickly, while we’re also focusing on driving down costs and boosting productivity.

Our approach is to remove as much friction in the system as possible, and that’s why we align so well with DevOps practices.

In terms of legacy modernization, we are running a major initiative enabling customers to apply the latest innovations in development methodologies, architectural patterns, and technologies to refresh their portfolio of legacy applications.

This is much more than just “lift and shift”.

Moving existing apps and databases to faster hardware or onto the cloud might get you slightly higher performance and marginally reduced cost, but you will fail to realize the transformational business agility, scale, or deployment freedom that true legacy modernization brings.

In our experience, by modernizing with MongoDB, organizations can build new business functionality 3-5x faster, scale to millions of users wherever they are on the planet, and cut costs by 70 percent or more, all by unshackling from legacy systems.

Do We Need Database Engineers?

Q3: Traditionally you’re either a developer or a database person … does this do away with database engineers? Do we need database engineers or can developers do everything?

A3: Developers are now the kingmakers; they are the hardest group of talent to retain.

The biggest challenge most enterprises see is about finding and keeping developer talent.

If you are looking for the best experience in working with data, MongoDB is the answer in our opinion!

It is not just about the persistence and the database … MongoDB Stitch is a serverless platform that drives integration with third-party cloud services and enables event-based programming through Stitch triggers.

Ultimately, it comes down to a data platform that any number of roles can use, in their “swim lanes”.

With the advent of the cloud, it’s so easy for customers not to have to worry about things they did before since they consume a pay-as-you-go service.

Maybe you don’t need a DBA for a project anymore: it’s important to allow our users to consume MongoDB in the easiest way possible.

But the bottom line is that we’re not doing away with database engineers, but shifting their role to focus on making a higher-value impact.

For engineers, we have capabilities and features like the aggregation pipeline, which allows them to transform data before processing.

MongoDB in IoT

Q4: IoT-related question … in retail, you want to put AI into the supermarket environment; it could be video surveillance or inventory management. It’s not about distributing across the cloud but into the edge and “fog” computing…

A4: At our recent MongoDB Europe event in London, we announced the general availability of MongoDB Mobile as well as the beta for Stitch Mobile Sync.

Since we already have a lot of customers on the network edge (you’ll find MongoDB on oil rigs, across the IoT, used by airlines, and for the management of fleets of cars and trucks), a lot of these elements are already there.

The advantage is how easy we make it to work with that edge data.

We’re thinking about the experience we provide in terms of working with data, giving people access to what they care about (tooling and integration), and looking at what MongoDB can provide natively on a data platform.

Cloud-native

Q5: I’m interested to know what proportion of your customer base, and/or data/transaction base, are ‘cloud native’ versus more traditional enterprises. Indeed, is this how you segment your customers, and how do you engage with different groups that you do target?

A5: We’d argue that every business should become cloud-native – and many traditional enterprises are on that journey.

Around 70 percent of all MongoDB deployments are on a private or public cloud platform, and from a product portfolio perspective, we work to cover the complete market – from startup programs to self-service cloud services, to corporate and enterprise sales teams.

As a result, we can meet customers wherever they are, and whatever their size.

My take: better ways exist, but how to preach to the non-converted?

Much that we see around us in technology is shaped as a result of the constraints of its time.

Relational databases enabled a step up from the monolithic data structures of the 1970s (though of course, some of the latter are still running, quite successfully), in no small part by enabling more flexible data structures to exist.

MongoDB took the same idea one step further, doing away with the requirement for a fixed schema.

Is the MongoDB model the right answer for everything?

No, and that would never be the point – nor are relational models, nor any other data management structures (including the newer capabilities in MongoDB’s stable).

Given that data management vendors will continue to innovate, more important is choosing the right tool for the job, or indeed, being able to move from one model to another if need be.

This is more about mindset, therefore. Traditional views of IT have been to use the same technologies and techniques because they always worked before.

Not only does this risk trying to put square pegs in round holes, but also it can mean missed opportunities if the definition of what is possible is constrained by what is understood.

I would love to think none of this needs to be said, but in my experience, large organizations still look backward more than they look forward, to their loss.

We often talk about skills in data science, the shortage of developers, and so on, but perhaps the greater gap is in senior executives that get the need for an engineering-first mindset.

If we are all software companies now, we need to start acting accordingly.

Source: gigaom.com

Flowing Data to Drive Digital: A Plan for CIOs to Unlock Data Fast, Efficiently, and Compliantly

Data is the new corporate asset class.

It’s instrumental to virtually every digital initiative.

Fresh test data is a vital pillar for cloud migrations.

DevOps teams rely on realistic data for building new apps.

AI/ML models are only as accurate as the information that feeds them.

But like any asset, its value isn’t static. It has a “half-life”—the relevance of data quickly decays over time.

The problem is that data is everywhere, growing and changing rapidly, and locked down by regulations like GDPR and other privacy rules. It’s enough to put powerful brakes on the best digital intentions.

This is why digital leaders are looking to empower data consumers to tap into the information they need—fast, efficiently, and compliantly.

That means rethinking the people, processes, and technology so that data can finally flow.

This webinar features Simon Gibson, CISO and GigaOm analyst, as well as Eric Schrock, CTO of Delphix. Simon and Eric will be discussing how to free data to drive innovation.

Join us for this free 1-hour webinar from GigaOm Research as we cover the following topics:

- Let’s level-set. In terms of value, how big is the stockpile of data that enterprises are holding?

- What are some of the challenges enterprises face when unlocking the data potential?

- What are the top cybersecurity, risk, and governance issues around unlocking data and how are enterprises addressing them?

- What are the best strategies or approaches to navigating the opportunities?

- What are the best strategies or approaches to navigating the challenges?

- Data is everywhere and overwhelming; where are enterprises starting?

- What are two to three lessons learned from enterprises that have already started to navigate these waters?

- How do cloud and/or external data sources throw complexity into the equation?

Register now to join GigaOm Research and Delphix for this free expert webinar.

Source: gigaom.com

Revenge of the Data Warehouse: How a Classic Tech Category Has Evolved, and Triumphed

The pressure to leverage data as a business asset is stronger than ever. Enterprises everywhere are eager to devise sound data strategies that are realistic and achievable, based on available budget, and sensitive to in-house technology skill sets.

For a while, it looked like open source, specialized big data compute frameworks, including Hadoop and Spark, were the way to go.

Enterprise organizations found them compelling for reasons of novelty, economics, and the apparent prudence of a future-looking technology.

But those frameworks are at a bit of a crossroads: the hype around them has subsided and — while things are improving — the success rate of enterprise projects involving them has been modest.

Meanwhile, the data warehouse (DW) which, for decades, has been a key technology platform for enterprise analytics, never went away.

Yes, DWs struggled and incumbent DW platforms still do, but recent advances in storage costs and compute scalability, especially in the cloud, have addressed the most important challenges faced by DW platforms.

As a result, we are in a DW renaissance period.

Problems with DWs have largely been solved, petabyte-scale data volumes no longer defeat them, and the familiarity and ease of use that kept them viable all this time are now helping them face their open source big data competition and, in many cases, emerge victorious.

Still, if DW platforms have changed, what should enterprises do to build an analytics strategy that integrates them?

Even the most DW-loyal shops will need to look at how DW platforms have evolved and adjust their strategies accordingly.

Organizations that have committed to open source analytics technologies will need to take a second look at DW platforms and consider a strategy that combines both, essentially bringing the data warehouse together with the data lake.

The trick to adapting to the new world of data warehousing is understanding that it is not only the technology that has changed but the applications and use cases for DW technology as well.

DW platforms do not need to be used exclusively for Enterprise DW implementations.

The platforms are now more versatile and can be used for use-case-specific workloads and even exploratory analytics.

In a sense, the DW isn’t just a DW anymore.

Even the juxtaposition of DW and Data Lake has shifted – DW platforms today are increasingly able to ingest raw, semi-structured data, or query it in place.

This means warehouse and lake technology can be used in combination and, sometimes, lake technology will not be necessary.

It is not just the Data Lake and its workloads that are becoming more integrated into the warehouse.

Streaming data, machine learning, and AI are onboarding as well.

In addition, data governance and data protection are starting to enter the DW orbit.

While the familiar relational model, dimensional design, and SQL are back, the application of DW technology now covers territory that may be less familiar.

Moreover, new vendors who have championed or been born in the cloud are emerging in leadership positions.

The new capabilities, new use cases, and new vendors make the space exciting but also difficult to navigate (for newbies and veterans alike).

Enterprise customers will need to understand how DW platforms have morphed and shape-shifted; how best to use, deploy, and implement them; how to combine them with other applications; and what the key differences are between the vendors and their offerings.

Without this knowledge, enterprise buyers will be frozen in indecision.

With it, they will be armed to leverage today’s DW platforms to their fullest and cherry-pick other technologies that can augment and optimize them.

The end result is that organizations in the know will be ready to analyze all their data, in technologically familiar environments, for full competitive and operational advantage.

In this report, we map out today’s DW landscape in the context of where the technology has been, where it is, and where it is going.

Key Findings:

- The Data Warehouse is alive and well, perhaps enjoying its biggest popularity wave to date

- Open source technology challengers addressed issues of storage costs and horizontal scalability but did not achieve parity in terms of enterprise skill-set abundance, interactive query performance, or acting as authoritative data repositories

- The advent of the cloud has helped DW platforms transcend their vulnerabilities, through the cloud’s power of elasticity for compute and the use of economical, infinitely scalable cloud object storage

- Transcending their vulnerabilities and satisfying customers’ need for familiar SQL-relational platform paradigms has turned out to be a one-two punch for DWs

- Customers need to bring themselves up-to-date on the latest DW innovations and product categories, understanding each in the context of the DW’s historical evolution and market factors

- Customers must correlate DW product categories with corporate cloud (and multi-cloud) strategy

Source: gigaom.com